Fitting Agentic AI Components in a Mental Model

Modern LLMs are unlike any other software in their flexibility and breadth of application. But that same open-endedness makes them incredibly difficult to engineer against.

In traditional software, we code for specific inputs and predictable outputs. With an LLM, the input can be anything and the output is non-deterministic. Variance is manageable; the real barrier to production is that the output can be confidently false.

Why the System Matters

We are realizing that to make these models useful, we have to constrain them.

This aligns with the shift toward “Agentic Workflows” popularized by Andrew Ng in 2024. He showed that, on the HumanEval coding benchmark, an older model (GPT-3.5) wrapped in a loop where it could critique and fix its own code outperformed the then state-of-the-art model (GPT-4) that only got one shot.

The intelligence provides the capability, but the system provides the reliability.
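To make the "wrapped in a loop" idea concrete, here is a minimal sketch of a generate, test, and retry loop. It is an illustration under stated assumptions, not Ng's actual setup: `reflect_and_fix`, `fake_model`, and `check_add` are hypothetical names, and the "model" is a stand-in function so the example runs without any API.

```python
from typing import Callable, Optional


def reflect_and_fix(
    generate: Callable[[str, Optional[str]], str],
    run_tests: Callable[[str], Optional[str]],
    task: str,
    max_rounds: int = 3,
) -> tuple[str, bool]:
    """Generate code, test it, and feed failures back until it passes or rounds run out."""
    feedback: Optional[str] = None
    code = ""
    for _ in range(max_rounds):
        code = generate(task, feedback)  # the model call (hypothetical callable)
        feedback = run_tests(code)       # None means every check passed
        if feedback is None:
            return code, True
    return code, False


# Stand-in "model": the first attempt is buggy, the second one uses the feedback.
def fake_model(task: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return "def add(a, b): return a - b"
    return "def add(a, b): return a + b"


def check_add(code: str) -> Optional[str]:
    namespace: dict = {}
    exec(code, namespace)  # demo only; never exec untrusted model output unsandboxed
    return None if namespace["add"](2, 3) == 5 else "add(2, 3) should return 5"


code, passed = reflect_and_fix(fake_model, check_add, "write add(a, b)")
```

The one-shot equivalent would stop after the first `generate` call and ship the buggy version; the loop is what turns a failed check into a second, corrected attempt.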

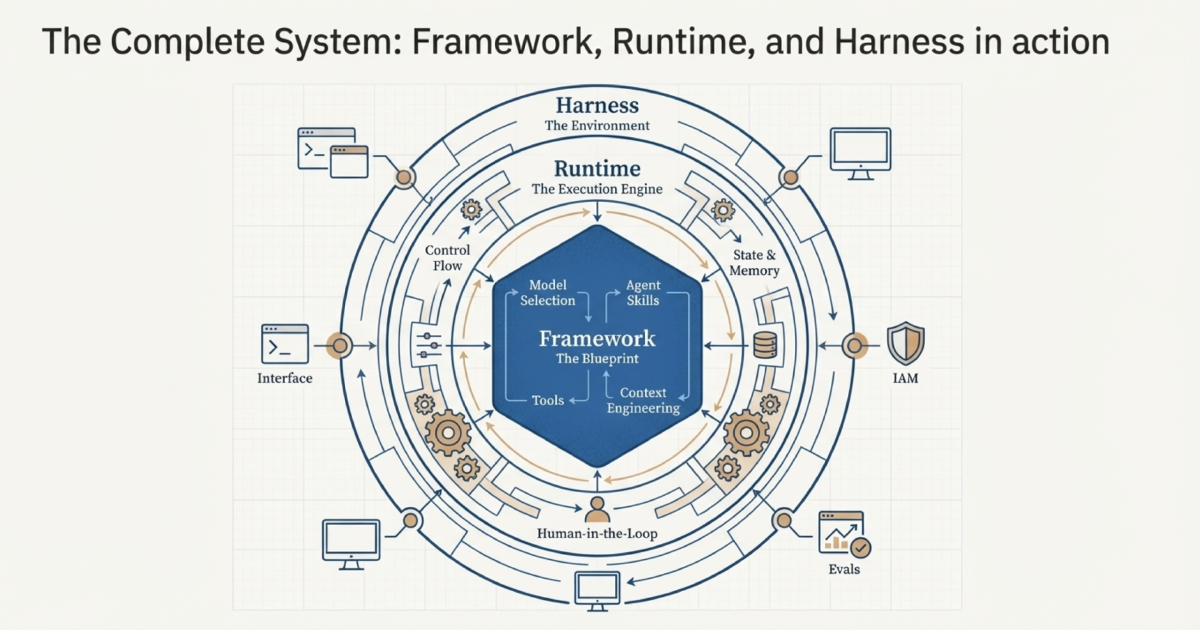

Let’s try to map all of this. The map below is inspired by the Framework, Runtime, and Harness terms that LangChain proposed to categorize the emerging stack:

1. Framework (The Blueprint)

This defines what the agent is.

- Model Selection: Choosing the right model and configuring the parameters

- Context Engineering: Architecting the “Global State” by managing window size and compression to ensure the model sees what it needs

- Agent Skills: The modern replacement for long system prompts. Instead of dumping all rules at once, we package instructions into modular Skills that are loaded dynamically only when needed

- Tools: The functions the agent can call to interact with the world (e.g. exposed through MCP, the Model Context Protocol)

- Abstraction: The code that decouples your logic from the specific provider to prevent vendor lock-in
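Two of the bullets above, dynamically loaded Skills and provider abstraction, can be sketched in a few lines. This is a simplified illustration, not any vendor's Skills format: the `Skill` dataclass, the keyword `triggers`, the `ChatModel` protocol, and `EchoModel` are all hypothetical, and real systems usually let the model pick a skill from its description rather than matching keywords.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatModel(Protocol):
    """Provider-agnostic interface; each vendor gets a thin adapter behind it."""

    def complete(self, system: str, user: str) -> str: ...


@dataclass(frozen=True)
class Skill:
    name: str
    triggers: tuple[str, ...]  # hypothetical keyword triggers
    instructions: str


SKILLS = [
    Skill("sql", ("query", "database"), "Use parameterized SQL only."),
    Skill("release", ("deploy", "release"), "Follow the release checklist."),
]


def build_system_prompt(task: str, skills: list[Skill],
                        base: str = "You are a careful assistant.") -> str:
    """Include only the skills whose triggers appear in the task text."""
    text = task.lower()
    selected = [s for s in skills if any(t in text for t in s.triggers)]
    return "\n".join([base] + [f"[{s.name}] {s.instructions}" for s in selected])


class EchoModel:
    """Stand-in provider that returns its inputs, so the sketch runs offline."""

    def complete(self, system: str, user: str) -> str:
        return f"{system}\n---\n{user}"


def run(model: ChatModel, task: str) -> str:
    return model.complete(build_system_prompt(task, SKILLS), task)


prompt = build_system_prompt("Write a query against the orders database", SKILLS)
```

Swapping providers then means writing another class with a `complete` method; `run` and the skill logic stay untouched.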

2. Runtime (The Execution)

This manages how the agent runs.

- State & Memory: Handling persistence so the agent can resume if it crashes

- Control Flow: The loop itself. It manages retries and prevents infinite loops

- Human-in-the-Loop: Freezing the state so a human can approve a plan before execution
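The three runtime concerns above fit in one small loop. The sketch below is a toy under stated assumptions, not a real runtime's API: `run_agent`, the JSON checkpoint file, and the `needs_approval` flag are hypothetical names chosen for illustration.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def run_agent(
    step: Callable[[dict], dict],
    approve: Callable[[dict], bool],
    checkpoint: Path,
    max_steps: int = 10,
    max_retries: int = 2,
) -> dict:
    # State & memory: resume from the last checkpoint if a previous run crashed.
    if checkpoint.exists():
        state = json.loads(checkpoint.read_text())
    else:
        state = {"step": 0, "done": False}

    # Control flow: max_steps is the guard against infinite loops.
    while not state["done"] and state["step"] < max_steps:
        # Human-in-the-loop: the state stays frozen until someone approves.
        if state.get("needs_approval"):
            if not approve(state):
                state["status"] = "rejected"
                break
            state["needs_approval"] = False

        for attempt in range(max_retries + 1):
            try:
                state = step(dict(state))
                break
            except RuntimeError:
                if attempt == max_retries:
                    raise  # retries exhausted; surface the failure

        state["step"] += 1
        checkpoint.write_text(json.dumps(state))  # persist after every step
    return state


def demo_step(state: dict) -> dict:
    if state["step"] == 0:
        state["plan"] = "delete temp files"
        state["needs_approval"] = True  # pause before acting on the plan
    else:
        state["done"] = True
    return state


with tempfile.TemporaryDirectory() as tmp:
    result = run_agent(demo_step, approve=lambda s: True,
                       checkpoint=Path(tmp) / "state.json")
```

In this demo the first step only produces a plan, the approval callback signs it off, and the second step completes the run.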

3. Harness (The Environment)

This describes where the agent lives.

- Interface: The connection to the real world (IDE, Browser, etc.)

- IAM: Context-Aware Permissions. E.g. we don’t just check whether an agent can read a file; we check whether reading it makes sense for the current task

- Evals: Running simulated scenarios to grade reliability and catch regressions before deployment
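As a rough sketch of the IAM and Evals bullets together: a permission check that looks at both the agent's role and the current task, plus a tiny scenario suite that grades it. Everything here is hypothetical (the `Task` dataclass, the `file_reader` role, the glob patterns, the scenarios); it only illustrates the shape of the idea.

```python
from dataclasses import dataclass
from fnmatch import fnmatch


@dataclass(frozen=True)
class Task:
    name: str
    allowed_paths: tuple[str, ...]  # glob patterns relevant to this task


def may_read(agent_roles: set[str], task: Task, path: str) -> bool:
    """Allow a read only if the agent holds the role AND the file fits the task."""
    has_permission = "file_reader" in agent_roles
    relevant = any(fnmatch(path, pattern) for pattern in task.allowed_paths)
    return has_permission and relevant


# Simulated scenarios: (path the agent asks for, expected decision).
SCENARIOS = [
    ("docs/intro.md", True),
    ("secrets/prod.env", False),
]


def run_evals(task: Task, roles: set[str]) -> float:
    """Return the fraction of scenarios where the decision matches the expectation."""
    passed = sum(may_read(roles, task, path) == expected
                 for path, expected in SCENARIOS)
    return passed / len(SCENARIOS)


docs_task = Task("update docs", ("docs/*", "README.md"))
score = run_evals(docs_task, {"file_reader"})
```

Running the same scenarios before each deployment is what turns the check into a regression test: if a change loosens `may_read`, the score drops.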

The Summary

We are effectively moving from Prompt Engineering to System Engineering.

This brought some order to the chaos for me. The abstraction can’t fit everything, and my mental model is still evolving, so I’m curious to hear what I’m missing!

I built a framework for adopting agentic AI with trust at the center, with interactive explainers on the protocols behind it. I also run a live training programme on this at trustedagentic.ai.
